Phase-III Image Search

- mbbhavana9

- Nov 30, 2019

Updated: Dec 1, 2019

In this module, you find an image by searching with any text, and the images relevant to that text are displayed.

Image captioning is the task of generating a textual description of an image. An attention-based model is used to generate the captions. The model is built with tf.keras and eager execution, and the captions are generated with TensorFlow in Google Colab.

When run, this model's pipeline downloads the MS-COCO dataset, preprocesses and caches a subset of the images using Inception V3, trains an encoder-decoder model, and then uses that model to generate captions for new images.
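To make "attention-based" concrete, here is the kind of additive (Bahdanau-style) attention step such a captioning decoder typically performs at each word: score every image region against the decoder's hidden state, turn the scores into weights with a softmax, and take the weighted sum of region features as the context vector. This is a minimal NumPy sketch with random weights and small, made-up dimensions, not the trained model; the 64 regions stand in for a flattened 8×8 Inception V3 feature map, and the names `bahdanau_attention`, `W1`, `W2`, and `v` are illustrative.

```python
import numpy as np

def softmax(x, axis=0):
    # Subtract the max before exponentiating for numerical stability.
    e = np.exp(x - np.max(x, axis=axis, keepdims=True))
    return e / np.sum(e, axis=axis, keepdims=True)

def bahdanau_attention(features, hidden, W1, W2, v):
    """Additive attention over image regions (illustrative sketch).

    features: (num_regions, feat_dim) encoder outputs, e.g. a flattened CNN feature map
    hidden:   (hidden_dim,) decoder state from the previous time step
    W1: (feat_dim, units), W2: (hidden_dim, units), v: (units,)
    Returns the context vector (feat_dim,) and attention weights (num_regions,).
    """
    # Score each region i as v^T tanh(W1 f_i + W2 h); hidden @ W2 broadcasts over regions.
    scores = np.tanh(features @ W1 + hidden @ W2) @ v  # (num_regions,)
    weights = softmax(scores)                          # non-negative, sums to 1
    context = weights @ features                       # weighted sum of region features
    return context, weights

# Random stand-in values; a real model learns W1, W2, v during training.
rng = np.random.default_rng(0)
num_regions, feat_dim, hidden_dim, units = 64, 16, 8, 10
features = rng.normal(size=(num_regions, feat_dim))
hidden = rng.normal(size=hidden_dim)
W1 = rng.normal(size=(feat_dim, units))
W2 = rng.normal(size=(hidden_dim, units))
v = rng.normal(size=units)

context, weights = bahdanau_attention(features, hidden, W1, W2, v)
print(context.shape, weights.shape)  # (16,) (64,)
```

The context vector is what the decoder combines with the previous word's embedding to predict the next word, so each generated word can "look at" a different part of the image.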

Contributions:

I uploaded the images to Google Drive and wrote a script to generate the URLs of the images in an Excel sheet. The CSV file of image URLs was then used to generate the captions.
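A minimal sketch of such a URL-generation script, assuming the images are shared publicly and that the `https://drive.google.com/uc?export=view&id=<FILE_ID>` direct-view pattern is used. The post does not say which URL format was used, the file IDs below are made up, and the helper names are hypothetical. It writes CSV directly (which Excel can open) instead of an Excel workbook.

```python
import csv
import io

# Hypothetical Drive file IDs; real ones come from each image's share link.
file_ids = ["1AbCdEfG", "2HiJkLmN", "3OpQrStU"]

def drive_view_url(file_id):
    # Assumed direct-view URL pattern for a publicly shared Drive file.
    return f"https://drive.google.com/uc?export=view&id={file_id}"

def write_url_csv(ids, out):
    # One header row, then one (image_id, url) row per image.
    writer = csv.writer(out)
    writer.writerow(["image_id", "url"])
    for fid in ids:
        writer.writerow([fid, drive_view_url(fid)])

buf = io.StringIO()
write_url_csv(file_ids, buf)
print(buf.getvalue())
```

To write a real file, pass `open("image_urls.csv", "w", newline="")` instead of the `StringIO` buffer; `newline=""` is what the `csv` module documentation recommends for files used with `csv.writer`.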

As shown below, I used pandas to read the data from the CSV file.

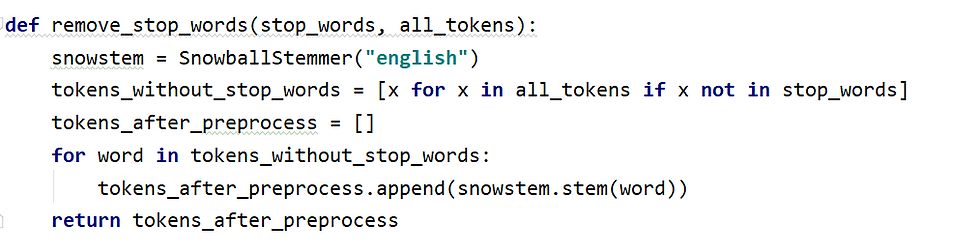
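A sketch of that step, assuming the CSV has `image_id` and `url` columns (hypothetical names). `generate_caption` is a hypothetical placeholder for the trained model's inference call, and an in-memory string replaces the real file so the example runs on its own.

```python
import io
import pandas as pd

# Stand-in for the real file; in Colab this would be pd.read_csv("image_urls.csv").
csv_text = """image_id,url
1AbCdEfG,https://drive.google.com/uc?export=view&id=1AbCdEfG
2HiJkLmN,https://drive.google.com/uc?export=view&id=2HiJkLmN
"""

def generate_caption(url):
    # Hypothetical placeholder: the real version would load the image at `url`
    # and run the trained encoder-decoder model on it.
    return f"caption for {url.rsplit('=', 1)[-1]}"

df = pd.read_csv(io.StringIO(csv_text))
# Add one generated caption per image URL as a new column.
df["caption"] = [generate_caption(u) for u in df["url"]]
print(df[["image_id", "caption"]])
```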

As in the Phase-1 search, I preprocessed the captions generated above and computed TF-IDF weights for all the terms in the data. Cosine similarity between the query and each caption is then used to retrieve the images whose captions best match the query terms.
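The retrieval step can be sketched in pure Python as follows. The post does not give the exact preprocessing or weighting, so the tokenizer, the small stopword list, and the choice of tf = count / caption length and idf = log(N / df) are assumptions; the function names are illustrative.

```python
import math
import re
from collections import Counter

# Small illustrative stopword list; a real one would be larger.
STOPWORDS = {"a", "an", "the", "of", "on", "in", "with", "is", "and"}

def preprocess(text):
    # Lowercase, keep alphabetic tokens, drop stopwords (no stemming).
    return [t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOPWORDS]

def tfidf_vectors(docs):
    tokenized = [preprocess(d) for d in docs]
    n = len(docs)
    # Document frequency: number of captions containing each term.
    df = Counter(term for toks in tokenized for term in set(toks))
    idf = {t: math.log(n / df[t]) for t in df}
    vectors = []
    for toks in tokenized:
        tf = Counter(toks)
        vectors.append({t: (c / len(toks)) * idf[t] for t, c in tf.items()})
    return vectors, idf

def cosine(u, v):
    # Sparse vectors stored as {term: weight} dicts.
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    nu = math.sqrt(sum(w * w for w in u.values()))
    nv = math.sqrt(sum(w * w for w in v.values()))
    return dot / (nu * nv) if nu and nv else 0.0

def search(query, captions, top_k=3):
    vectors, idf = tfidf_vectors(captions)
    # Weight query terms with the corpus idf; ignore terms not in any caption.
    q_toks = [t for t in preprocess(query) if t in idf]
    q_vec = {t: (c / len(q_toks)) * idf[t] for t, c in Counter(q_toks).items()}
    scored = sorted(((cosine(q_vec, vec), i) for i, vec in enumerate(vectors)), reverse=True)
    # Return (caption index, score) for the best non-zero matches.
    return [(i, s) for s, i in scored[:top_k] if s > 0]

captions = [
    "a dog running on the beach",
    "a cat sleeping on a sofa",
    "two dogs playing with a ball in the park",
]
print(search("dog on beach", captions))
```

Only the first caption matches here: without stemming, "dogs" in the third caption is a different term from "dog", which is one reason the Phase-1 preprocessing choices carry over directly to search quality.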

Challenges:

Training the image captioning model and generating captions took a lot of time.

My dataset has 8k images. There were operational challenges: Google Colab disconnected after generating captions for about 2k documents, so I had to split the dataset into batches of 2k documents. In the process, the GPU runtime exceeded Google Colab's 12-hour runtime limit on the VM. Because everything from the previous training run was lost, I had to rerun the training, which took an additional 3 hours, plus more than an hour to generate the captions.
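One way to make the 2k-document batching survive disconnects is to append each finished batch to a results file and, on restart, skip images that already have captions. This is an assumption about how such a script could be organized, not the script used here; `caption_in_batches` and `caption_fn` are hypothetical names. In Colab, the output path would ideally point at mounted Google Drive rather than the VM's local disk, which is wiped when the runtime resets.

```python
import csv
import os
import tempfile

BATCH_SIZE = 2000  # batch size described in the post

def batches(items, size):
    # Yield consecutive slices of at most `size` items.
    for start in range(0, len(items), size):
        yield start, items[start:start + size]

def caption_in_batches(urls, out_path, caption_fn, size=BATCH_SIZE):
    # Resume support: skip URLs already captioned in an earlier session.
    done = set()
    if os.path.exists(out_path):
        with open(out_path, newline="") as f:
            done = {row[0] for row in csv.reader(f) if row}
    pending = [u for u in urls if u not in done]
    for start, batch in batches(pending, size):
        rows = [(u, caption_fn(u)) for u in batch]
        # Append and close after every batch so finished work is on disk
        # even if the session disconnects during the next batch.
        with open(out_path, "a", newline="") as f:
            csv.writer(f).writerows(rows)
        print(f"captioned {start + len(batch)} / {len(pending)} pending images")

# Usage: simulate a session that stops after 3 images, then a resumed session.
out = os.path.join(tempfile.mkdtemp(), "captions.csv")
urls = [f"img{i}" for i in range(5)]
fake_caption = lambda u: "cap " + u  # stand-in for the real model
caption_in_batches(urls[:3], out, fake_caption, size=2)
caption_in_batches(urls, out, fake_caption, size=2)
```

This guards against losing generated captions; it does not by itself preserve a trained model, which would additionally need its weights saved (for example to Drive) after training.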
